I canceled my personal subscriptions to ChatGPT and Claude last week. Things have become a little too weird, even for me. The last straw was my ChatGPT “Year in Review,” which was beyond nonsensical. It told me my “type” was “The Strategist,” a category supposedly shared by only 3.6% of people. When I asked for the percentages in the other four categories, it admitted that all of the numbers were made up.

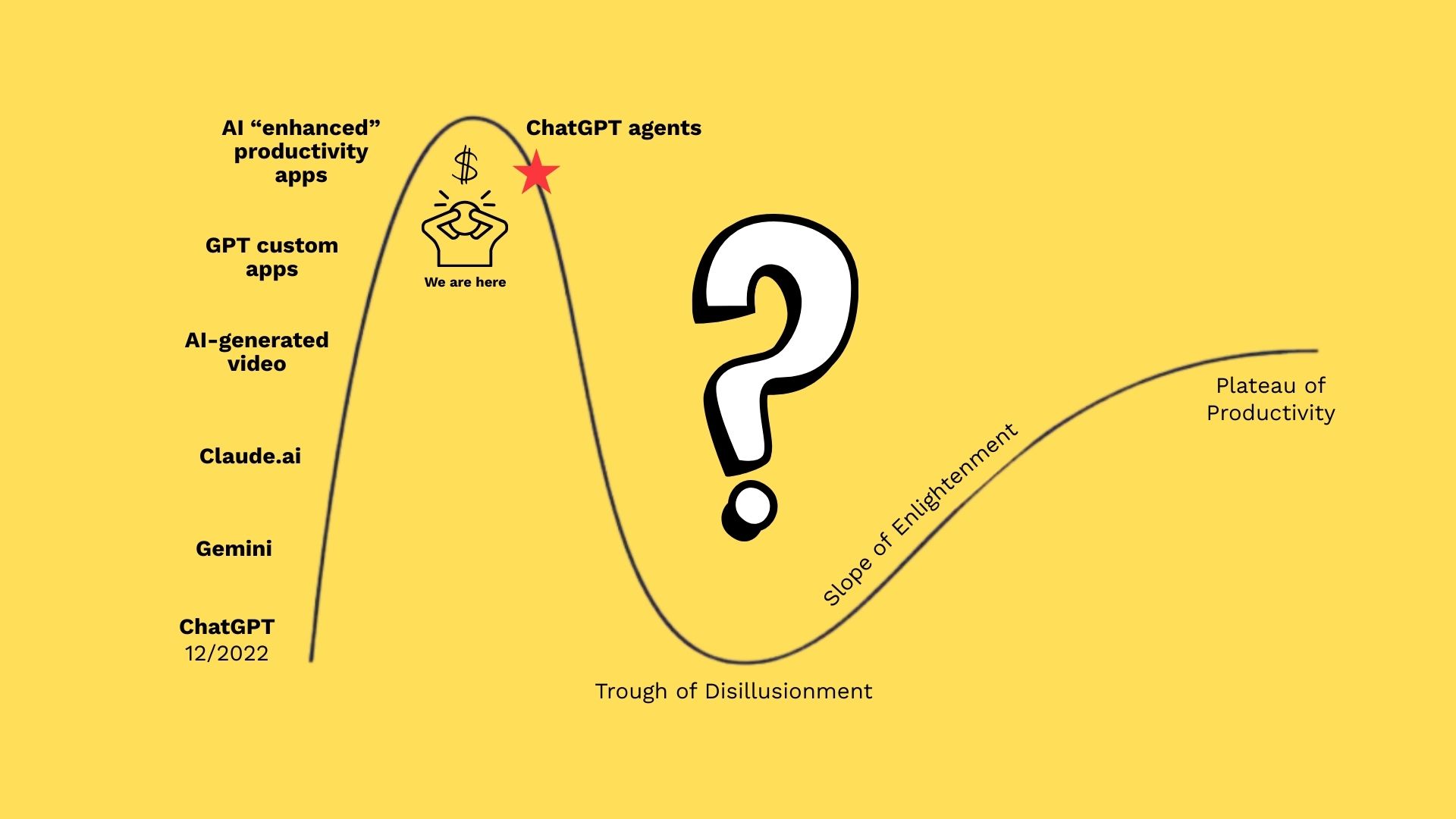

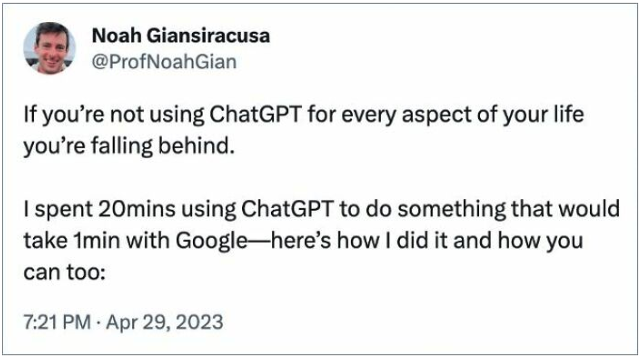

That kind of manufactured precision (stats that look authoritative but aren’t grounded in anything real) is a sign of where we are in the Gartner hype cycle today. And it’s jarring when marketing suggests these apps “know you” better than your friends do.

I canceled both for another reason, too. Big general-purpose models are fine for general questions, and so is search. In my work, though, I need specifics and sources, and there task‑specific tools tend to win.

This isn’t new: sophistication isn’t the same as reliability

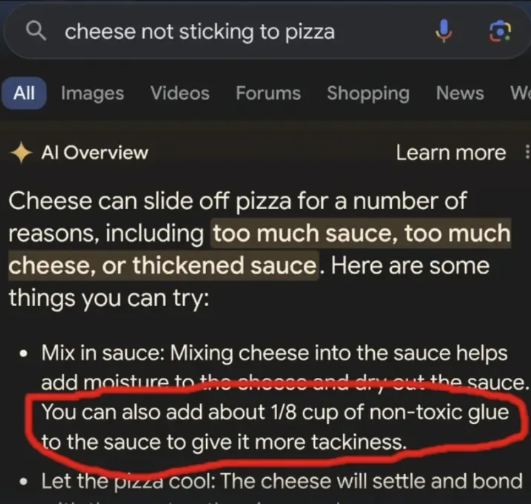

We’ve all seen the misses: memes, contradictions, made‑up stats. Even so, it’s easy to grant models more trust as they get “more sophisticated.”

For me, that’s a mistake: sophistication isn’t reliability, and hype can nudge us to ignore what our eyes already tell us.

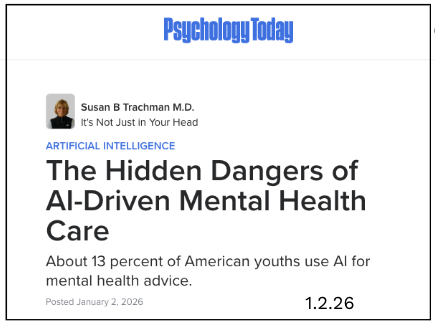

What’s funny in memes turns serious in headlines. These don’t feel like edge cases to me. They look like the technology’s limits showing up in real life.

It’s human all the way down

There are calls for more “humans in the loop” and more “human‑centered AI.” But the motivations, incentives, policies, hopes, fears, actions, and inactions that created and sustain GenAI are already human, whether the aim is to market it with overblown claims or to adopt it to make hard work simpler. All of it shapes the weirdness above. In many ways, the outputs reflect us.

My next step: Slow AI

I’ve written about Slow AI as a mindset; here’s how I’m applying it.

I canceled my subscriptions. Still, in my IT role, I need to stay current with AI. Much of my work is exploratory, new, and creative. GenAI occasionally helps with that work, but I can do without it there. LLMs predict “the next token” from patterns in existing text, so they’re often great at looking backward: quickly producing something that’s been done before. Need to write an IT policy for loaning computers? Use AI. But with limited time and expansive intentions, I try to take a beat and decide where the value is.

What “Slow AI” looks like for me:

- Use it for: templates and boilerplate; summarizing long docs I can check; first‑pass outlines.

- Avoid or limit it for: novel arguments; high‑stakes claims; anything I can’t verify quickly.

- Process: write my thesis first; cap AI time; require two independent sources for facts; keep an error log.

- Privacy: I prefer smaller/local models when the material is sensitive or unique (e.g., research data, or my collected Kindle highlights in markdown).

- Human‑in‑the‑loop: when the work is new, I slow down, check the facts, and use narrower tools that keep me in the loop.

Tools: Lex for writing and Dia as my browser fit this approach because they’re task‑specific and closer to sources. Next up: small local models I can run on my laptop, for privacy and fewer surprises. Your advice is welcome.

Closing the loop

If the outputs reflect me, I’ll go slower and use less AI, favoring smaller, task‑specific tools tailored to my needs, and I expect the results to improve. Where do you draw the line?