Gallup’s recent report with SCSP—Reward, Risk, and Regulation: American Attitudes Toward Artificial Intelligence—offers clear insight into how trust and use are evolving. Below, I’ve distilled a few key findings and translated them into practical steps for organizations.

At a Glance

The big picture: Leaders who get their teams using AI at work—not just talking about it—will win the next phase of digital transformation.

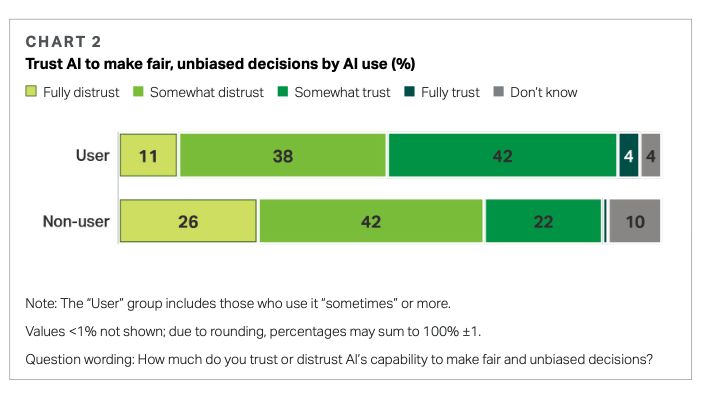

Why it matters: Gallup–SCSP finds near-universal awareness (98%), yet only about one in four Americans use AI in education or work (26%); overall, 39% use AI “sometimes or more.” But here’s the key insight: People who use AI are twice as likely to trust it to make fair, unbiased decisions compared to non-users (46% vs 23%).

Between the lines: This trust gap, documented in the report, isn’t just about adoption; it’s about building confidence through direct experience. For leaders ready to move beyond AI awareness to actual transformation, that is an opportunity to get ahead. The trend adds urgency: Gallup reports that workplace AI use has nearly doubled in two years, so the time to build disciplined capability is now.

Leading Through the Trust Divide

The trust data reveals both a risk and an opportunity. Gallup–SCSP reports that nearly one-third of Americans trust AI to make fair, unbiased decisions—a concerning statistic given the technology’s current limitations. Yet complete skepticism is equally problematic. The leadership challenge? Guide teams toward informed trust through practical, real-world experience that reveals both AI’s capabilities and constraints.

This presents a clear mandate: develop structured learning programs that build real confidence, not blind faith. The data support this approach—regular AI users are significantly more likely to view AI as “very important” to the future of the U.S. (70%) compared to non-users (45%). More importantly, they develop better judgment about where and how to apply AI effectively.

The key insight for leaders: Active AI use doesn’t just build trust. Hands-on practice teaches discernment: knowing when, where, and how to rely on AI, and when to be cautious.

Look for three things:

- Reliability: Where did the information come from? Is it consistent? Does the tool show confidence or cite sources?

- Context fit: Does the output match your audience, standards, and compliance needs?

- Risk level: What happens if it’s wrong? Is this low stakes or high stakes?

Then make a simple call:

- Use it as-is for low-risk, high-volume work (drafts, summaries, formatting).

- Refine it when it’s close but needs expert review and edits.

- Reject it when the stakes are high or the output fails your checks.

This keeps AI where it’s strongest—speeding routine tasks—while people keep ownership of judgment and final decisions.
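For teams that want to build this triage into a review checklist or an internal tool, the three checks and the three calls can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the names `OutputCheck`, `Call`, and `triage` are hypothetical, and treating "close" as "exactly one of the reliability and context-fit checks fails" is an assumption made here to keep the rule concrete.

```python
from dataclasses import dataclass
from enum import Enum


class Call(Enum):
    USE_AS_IS = "use as-is"
    REFINE = "refine"
    REJECT = "reject"


@dataclass
class OutputCheck:
    """A reviewer's answers to the three questions for one AI output."""
    reliable: bool      # Reliability: sources are known and consistent
    fits_context: bool  # Context fit: matches audience, standards, compliance
    high_stakes: bool   # Risk level: an error would be costly


def triage(check: OutputCheck) -> Call:
    """Map the three checks to a use / refine / reject call."""
    # Count how many of the two quality checks failed.
    failed = [check.reliable, check.fits_context].count(False)

    # Reject when the stakes are high or the output fails its checks.
    if check.high_stakes or failed == 2:
        return Call.REJECT
    # Assumption: "close but needs expert review" means one check failed.
    if failed == 1:
        return Call.REFINE
    # Low risk and both checks pass: use it as-is.
    return Call.USE_AS_IS


# Example: a low-stakes draft that is reliable but off-tone for the audience.
draft = OutputCheck(reliable=True, fits_context=False, high_stakes=False)
print(triage(draft).value)  # prints "refine"
```

The thresholds are the part to adapt. A team might, for example, allow high-stakes outputs through to "refine" under mandatory expert review rather than rejecting them outright.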

From Analysis to Action

Your organization faces a stark choice: lead or lag. Gallup–SCSP finds strong public support for workforce training and education programs to develop or use AI (72%), while many organizations remain stuck without a clear vision or strategy. The result? Teams face unnecessary barriers, including unclear usage policies, limited access to tools, and a lack of structured learning paths.

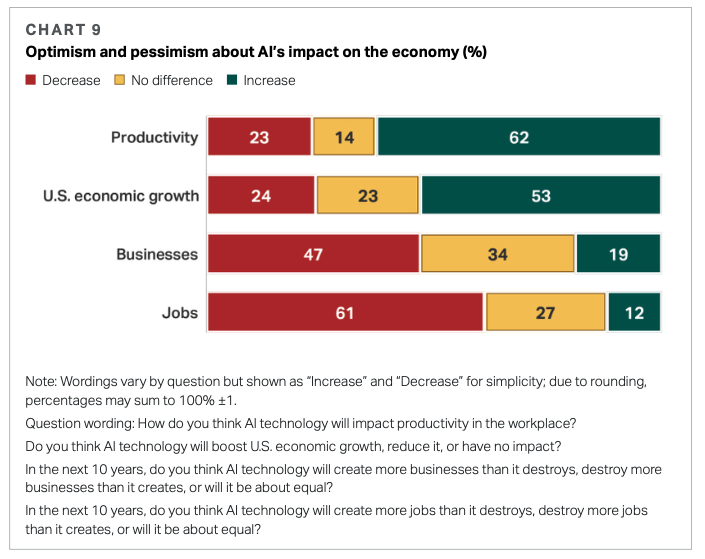

The stakes keep rising: Most Americans expect AI to boost productivity (62%) and economic growth (53%), while 61% believe AI will destroy more jobs than it creates. That creates a classic tension: some leaders wait and stall; others rush and scatter. Waiting means teams don’t practice or prepare. Rushing creates tool sprawl and shallow pilots. The strategic path is clear: pick a few high‑fit workflows, integrate them well, and measure business results—a pattern the MIT NANDA State of AI in Business 2025 report documents.

The implication: While many organizations are either stalled or scattered, you have a window to figure this out and get it right.

Practical Steps to Build Trust and ROI

Ready to move strategically while others wait? Here’s how to build momentum:

- Start Where You’ll Win: Choose one or two projects where your teams can quickly prove AI’s value—think routine tasks they already do well.

- Lead from Strength: Target areas where your team’s expertise gives them natural authority to evaluate AI’s work.

- Establish Clear Rules: Set specific guidelines for where AI supports versus where humans must decide. State what data AI can access, when human review is required, and how it’s logged.

- Build Visible Momentum: Celebrate early wins publicly and use them to engage staff and identify where to scale next.

The key to success is to start now and to start smart. Your teams will develop confidence through direct experience, and you’ll learn what works for your specific organization.

Turn Trust into Action. Turn Practice into a Movement.

Experience with AI compounds. Start a simple system today and you’ll set the pace instead of playing catch-up. Each successful interaction builds momentum, creating a feedback loop that expands capabilities and surfaces new opportunities. As confidence spreads across the organization, you’re not just adopting technology; you’re building a movement of people who think boldly, act experimentally, and are equipped to lead the organization’s next phase of innovation.

Ready to lead this transformation? Already started? What’s your first strategic AI win?

Sources

- Gallup & Special Competitive Studies Project (SCSP). “Reward, Risk, and Regulation: American Attitudes Toward Artificial Intelligence.” Fielded Apr 25–May 5, 2025. Copyright 2025 Gallup, Inc. https://www.gallup.com/analytics/695033/american-ai-attitudes.aspx

- Pendell, R. (2025, June 16). “AI use at work has nearly doubled in two years.” Gallup. https://www.gallup.com/workplace/691643/work-nearly-doubled-two-years.aspx

- MIT NANDA State of AI in Business 2025. https://mlq.ai/media/quarterly_decks/v0.1_State_of_AI_in_Business_2025_Report.pdf